Rendering Normalized Object Coordinates in Blender 3.3

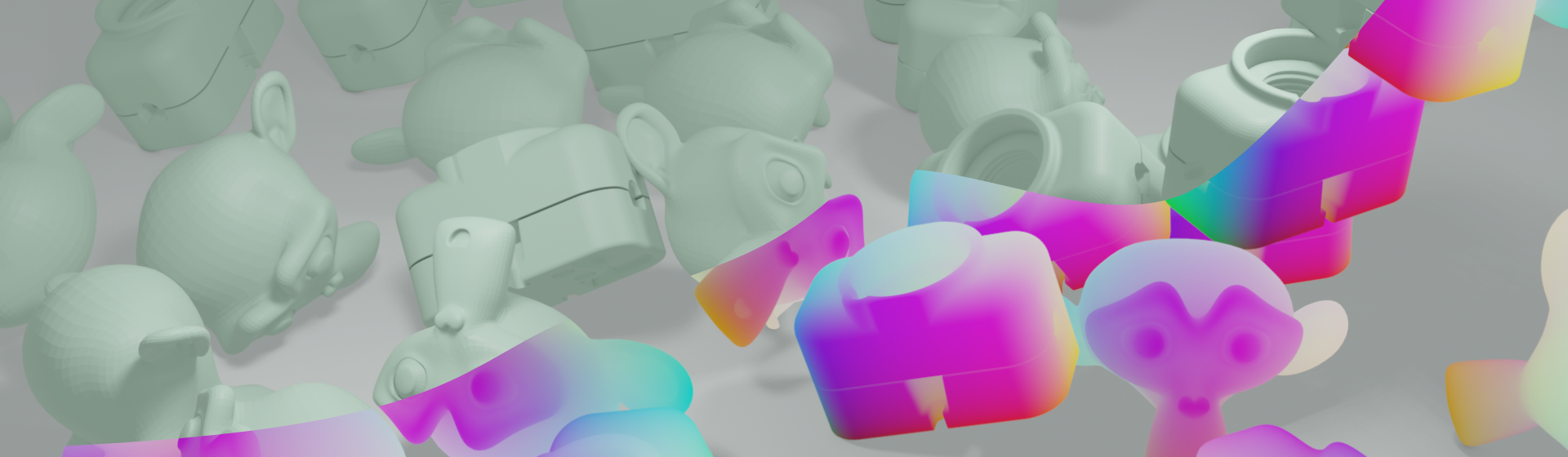

As methods for estimating 6D poses from images gather momentum, "Normalized Object Coordinates" have entered the arena. These are pixel-wise encodings of the object position. In that sense, they are like a normal map, but instead of encoding the normal at every point, they encode the position.

How can we make a material in Blender that robustly visualizes these NOCs?

Blender provides the coordinates in the Shader Editor via the Geometry node (world space) or the Texture Coordinate node (object space). However, when we try to use these object coordinates directly, we'll see this unsightly mess:

This happens because the coordinates are relative to the object origin. However, for the NOCs, we don't want them relative to the origin, because the origin might be anywhere. We want them relative to the geometric bounds! And on top of that, we want it to be automatic, so we don't have to touch any parameters (like an offset) for each individual mesh.
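Written out, the goal is to rescale the object's bounding box to the unit cube. The notation below is mine, and normalizing each axis independently is an assumption about the intended convention:

$$
\mathrm{NOC}(\mathbf{p})_i = \frac{p_i - p_i^{\min}}{p_i^{\max} - p_i^{\min}}, \qquad i \in \{x, y, z\}
$$

Here $\mathbf{p}$ is a surface point in object space, and $p_i^{\min}$, $p_i^{\max}$ are the minimum and maximum of coordinate $i$ over all vertices of the mesh. Wherever the origin sits, the result lands in $[0, 1]$ on every axis.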

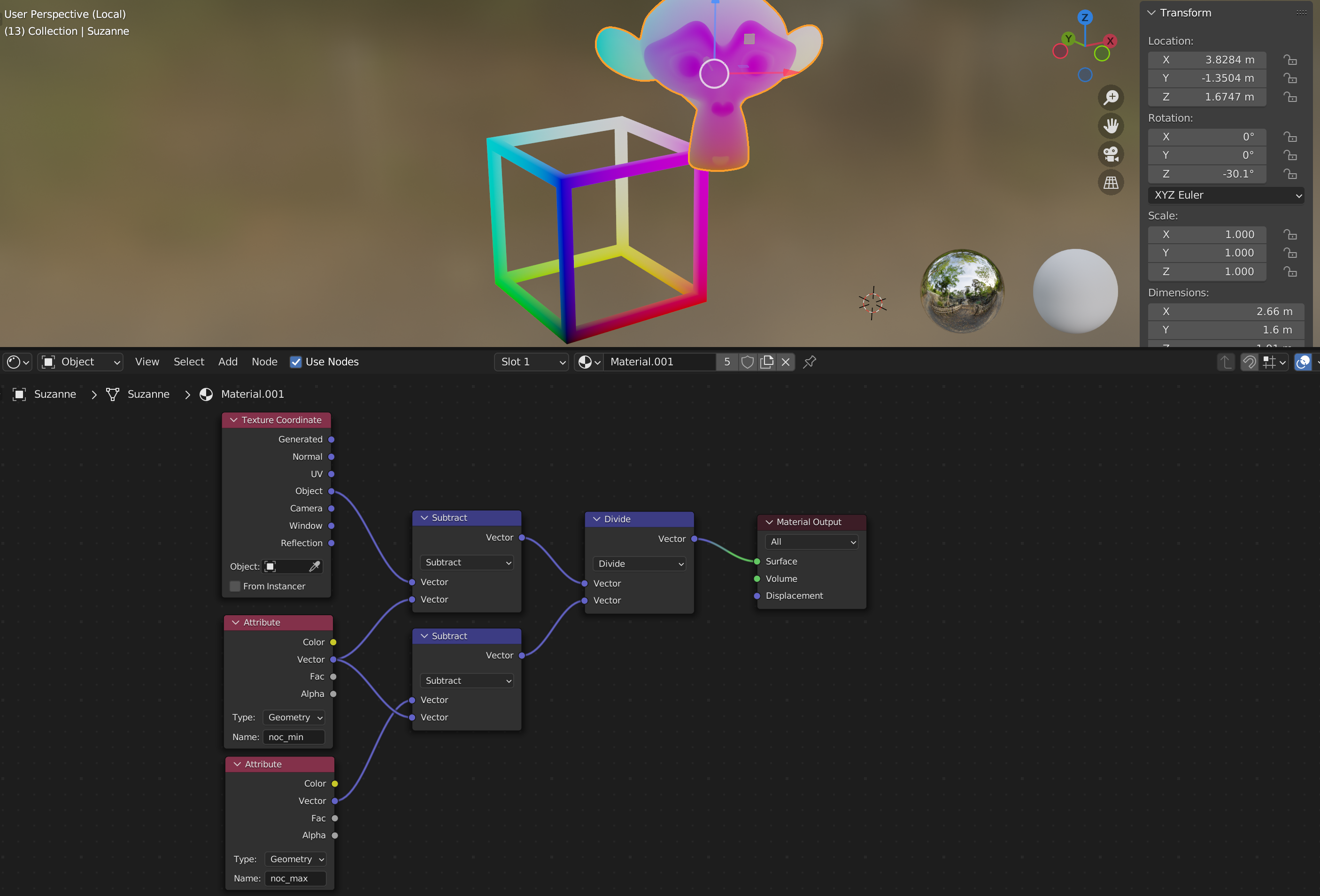

Now how do we do this with the Shader Editor?

By also using the Geometry Node Editor! In my experimentation, I didn't find a way to do this purely with shading nodes. The crux is getting access to the vertex positions and being able to take the minimum over all vertices. The shader nodes process each pixel in parallel, and there is no cross-pixel dataflow as far as I can tell.

So to still have a general NOC material, we also have to set up some geometry nodes.

Geometry nodes, which were introduced in Blender 2.92, allow us to:

1) Access all of the geometry information
2) Compute statistics on this information
3) Encode these statistics in custom attributes

So the basic workflow looks like this: First, we find the object boundaries relative to the object origin (Geometry Nodes). Second, we use this information in the Shader Editor to offset and rescale the object coordinates.

To get the geometry bounds, we use the Attribute Statistic node on the "position" attribute of whatever geometry is given. Then we use the Store Named Attribute node to encode this information onto the object. Don't forget to attach the geometry node group to the object by adding it as a Geometry Nodes modifier in the Modifiers panel.

In the Shader Editor, we can now access these attributes via the Attribute node. Now it's just a matter of subtracting the min and dividing by the range (max minus min), and we have ourselves steaming hot NOCs in [0, 1].
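To sanity-check the arithmetic outside of Blender, here is a minimal plain-Python sketch of the two stages. It does not use the Blender API: `bounds_statistics` and `to_noc` are hypothetical helper names that only mimic what the geometry nodes and the shader nodes compute. Mapping a flat axis (zero extent) to 0 is my own assumption for avoiding a division by zero.

```python
def bounds_statistics(positions):
    """Geometry-side stage: per-axis min and range over all vertices."""
    axes = list(zip(*positions))  # regroup vertices into (xs, ys, zs)
    p_min = tuple(min(axis) for axis in axes)
    p_range = tuple(max(axis) - lo for axis, lo in zip(axes, p_min))
    return p_min, p_range


def to_noc(point, p_min, p_range):
    """Shader-side stage: map one object-space point to [0, 1] per axis."""
    return tuple(
        # Assumption: a flat axis (zero range) is mapped to 0.0.
        (c - lo) / r if r > 0.0 else 0.0
        for c, lo, r in zip(point, p_min, p_range)
    )


# Four vertices of a mesh whose origin is not at the bounding-box corner.
vertices = [
    (-1.0, 2.0, 0.0),
    (3.0, 2.0, 0.0),
    (-1.0, 4.0, 0.0),
    (3.0, 4.0, 8.0),
]

p_min, p_range = bounds_statistics(vertices)
nocs = [to_noc(v, p_min, p_range) for v in vertices]
```

The bounding-box corners end up at 0 and 1 on each axis, and the centre of the box maps to (0.5, 0.5, 0.5), regardless of where the object origin was.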

cheers,

max